BULLET CLUSTER INTERPRETATION

by Miles Mathis

Abstract: Here I do a close analysis of two papers by Clowe et al claiming to find dark matter in the bullet cluster, showing that they do not prove what they claim to prove. I then show that my charge field fills the hole in the paper and in the cluster perfectly, by matching the 19 to 1 mass ratio found by the mainstream researchers. I do this with simple math, and derive the ratio from equations that are both current and longstanding. This shows that dark matter is not non-baryonic, it is photonic. It is the charge field.

In 2004, Douglas Clowe et al published an 8-page paper* on the bullet cluster at IOP claiming to have proven beyond any doubt the presence of dark matter in the cluster, and thereby the universe. Soon after they published a 5-page letter [A DIRECT EMPIRICAL PROOF OF THE EXISTENCE OF DARK MATTER]** compressing and extending the earlier paper. Since then these papers have been used to assert the death of all MOND's and MOG's (modified Newtonian dynamics or gravities) and to trumpet the victory of dark matter. Even the opposition has been deflated by this paper, and many or most MOND supporters have backtracked, trying to save face by presenting new papers that show a mixture of dark matter and modifications. This in itself is a sign of the times, since it proves that neither side is capable of close analysis. The Clowe paper is extremely weak, as I will show below, and the fact that anyone would be cowed by it is a very bad sign for physics.

As those who have been keeping up with my papers know, I am not a supporter of MOND or MOG. I have proved, with simple equations, that both MOND and dark matter are false. So I am not here as a defender of MOND. I can show you where I stand very quickly by analyzing the first two sentences of the second paper:

We have known since 1937 that the gravitational potentials of galaxy clusters are too deep to be caused by the detected baryonic mass and a Newtonian r⁻² gravitational force law (Zwicky 1937). Proposed solutions either invoke dominant quantities of non-luminous “dark matter” (Oort 1932) or alterations to either the gravitational force law (Bekenstein 2004; Brownstein & Moffat 2006) or the particles’ dynamical response to it (Milgrom 1983).

Both the first sentence of the paper and the second sentence of the paper are false, which does not bode well for the rest of it. The first sentence is true only if we do not include the charge field emitted by the “detected baryonic matter.” But if we allow that all baryonic mass emits a charge field, then this charge field must be included with any detection of matter. The standard model admits that all mass includes a charge field, so the sentence is false. To make the sentence true, we would have to make it,

Provided that we refuse to give the charge field real mass or mass equivalence, we have known since 1937 that the gravitational potentials of galaxy clusters are too deep to be caused by the detected baryonic mass and a Newtonian r⁻² gravitational force law.

The second sentence is also false, since what it should say is that the only widely publicized solutions are of those two sorts. As with the two political parties, Clowe wants you to believe you only have two choices. But since my solution invokes neither dark matter nor new functions, this sentence must be false. My solution exists, therefore it has “been proposed”, therefore sentence two is false.

But we get misdirection even before we get to the body of the paper. The first sentence of the abstract is this:

We present new weak lensing observations of 1E0657-558 (z = 0.296), a unique cluster merger, that enable a direct detection of dark matter, independent of assumptions regarding the nature of the gravitational force law.

The authors are claiming a direct detection of dark matter, which means they don't know the definition of “direct detection.” At best, with loads of assumptions and interpretations, they may claim an indirect detection of something unexplainable, which they then call dark matter. But there is nothing even approaching a direct detection of anything here. Then they claim that this direct detection, which is not a direct detection, is independent of assumptions regarding gravity. What they should say is that their paper is not a MOND, which should be fairly obvious considering the title. But their assumptions regarding gravity are omnipresent. To start with, they have just said that they are using weak lensing observations, so they must be assuming that gravity causes lensing. Beyond that, they are assuming the charge field doesn't have mass, that the photon is virtual, that gravity is absent at the quantum level, and a host of other things. This means that their assumptions are all mainstream assumptions, as of 2006, but it does not mean that their theory is independent of assumptions.

The last sentence of the abstract is also strange:

An 8σ significance spatial offset of the center of the total mass from the center of the baryonic mass peaks cannot be explained with an alteration of the gravitational force law, and thus proves that the majority of the matter in the system is unseen.

First of all, the paper doesn't show a spatial offset of the center of mass from the baryonic center of mass, it shows an offset from the plasma. The baryonic center of mass is roughly taken as the plasma center of mass only by claiming that the stellar matter is only 1-2% of the total, and therefore around 10% of the plasma total. Therefore the plasma stands for the baryonic center of mass here, within 10%. But the authors never prove that the stellar matter is 1-2% of the total. All they do is make one citation. Then they say this proves that the majority of the matter in the system is unseen. But even if all their assumptions and citations are 100% true and proved, this last proof of “unseen matter” does not prove dark matter. Why? Because I also believe that the majority of the mass in the system is unseen, but I do not believe in their dark matter. Notice that if I am correct, and if this “unseen matter” is my charge field, it would confirm the last sentence of their abstract. The charge field is dark and outweighs the baryonic field by 19 times (see below and my other papers). Therefore the majority of the matter in the system is unseen. The authors can prove their thesis regarding unseen matter, and still fail to justify their title.
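The percentages in this argument are simple ratios, and it can help to see them computed. The following is a minimal sketch in Python, not anything taken from the Clowe papers: the function names are hypothetical, and the inputs are only the round figures quoted in this article (stellar matter at 1-2% of the total, plasma taken as roughly 10% of the total, and a 19 to 1 ratio of unseen to seen mass).

```python
def share_of_one_component(part_fraction, whole_fraction):
    """Express one mass fraction as a share of another mass fraction."""
    return part_fraction / whole_fraction


def unseen_share(unseen_to_seen_ratio):
    """Convert an unseen:seen mass ratio into the unseen share of the total."""
    return unseen_to_seen_ratio / (unseen_to_seen_ratio + 1.0)


# Illustrative inputs only: round figures quoted in the text, not measurements.
stellar_low, stellar_high = 0.01, 0.02
plasma = 0.10  # assumed round value for the plasma share of the total

print(share_of_one_component(stellar_low, plasma))   # stellar as a share of plasma, low end
print(share_of_one_component(stellar_high, plasma))  # stellar as a share of plasma, high end
print(unseen_share(19.0))                            # unseen share for a 19:1 ratio
```

A 19 to 1 ratio puts 19/20 of the total in the unseen component, which is the sense in which "the majority of the matter in the system is unseen" holds under either interpretation of what the unseen component is.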

And that brings us to the title, which is even more bold than the abstract:

A DIRECT EMPIRICAL PROOF OF THE EXISTENCE OF DARK MATTER

We actually have neither a direct detection nor a direct empirical proof. To be clear, a direct empirical proof would be something like a unicorn in a box, as a proof of unicorns. What this paper offers is nothing even remotely resembling a unicorn in a box. What it offers is an extraordinary display of fudging, massaging, and finessing of equations and data (and the English language) to achieve a propaganda coup, one that will no doubt win someone a Nobel Prize in a few years. The paper is written expressly to impress the sort of spongy careerists who sit on these committees, so the future is almost predictable.

Yes, this paper of Clowe et al is another piece of unsubtle political posturing. It is attempting to hypnotize you from the first word, to convince you that “we have known since 1937” that this is not solvable with normal matter, and that the solution must be one of two. Only if you happen to be one of the multitudes of contemporary physicists who don't know what words mean—who don't know the difference between “direct” and “indirect” for example—will their paper convince you.

In paragraph 2 of the introduction, we are told this:

However, during a merger of two clusters, galaxies behave as collisionless particles, while the fluid-like X-ray emitting intracluster plasma experiences ram pressure. Therefore, in the course of a cluster collision, galaxies spatially decouple from the plasma.

That is the first major unproved assumption here, unclothed and standing in full daylight. In fact, it would be true only if the dark matter hypothesis were true, so the argument has already gone circular. The point of the paper is to show that the data proves the dark matter hypothesis, but we have an unproved dark matter assumption here in the second paragraph. Circular. Galaxies won't behave as collisionless particles unless they are mainly composed of so-called non-baryonic matter, in which case they behave as ghosts. But if galaxies are mainly composed of normal matter, they won't act as collisionless particles. They will act as fields with real densities. Therefore, if it is proven that plasma decouples from matter, it must be because of density variations, not the fact that galaxies are mainly collisionless. It is known that plasmas do have different densities than normal matter, so data showing decoupling is not proof of dark matter.

In paragraph 4, we find this:

In the absence of dark matter, the gravitational potential will trace the dominant visible matter component, which is the X-ray plasma. If, on the other hand, the mass is indeed dominated by collisionless dark matter, the potential will trace the distribution of that component, which is expected to be spatially coincident with the collisionless galaxies.

This is nothing but (semi)clever misdirection. The authors tell us that the X-ray plasma is the dominant visible matter component, but that has never been proven. Just because some physicists published a paper on it does not mean it has been proven. These authors treat a citation as proof. We are told that the “stellar component” of a galaxy is 1-2% [Kochanek et al, 2003], while the plasma component is 5-15% [Vikhlinin et al, 2006], but those percentages are only based on models. What models? Dark matter models! So these authors are using as evidence for their dark matter model percentages from previous papers that also used dark matter models. Again, circular. Before dark matter came along as a theory, it was thought that a large percentage of galactic mass was stellar. We can only get the percentage down to 1-2% by assuming undetectable WIMPs of some sort, so that the bulk of the mass is near-zero density and near-zero interacting. Again, these authors are assuming what they are trying to prove.

The only true assumption here is that the gravitational potential will trace the most massive component of the galaxies. But if the dominant matter component is not the X-ray plasma, then neither in the presence of dark matter nor the absence of it will the potential trace it. In which case, we have no “on the other hand.” Both the classical and the dark matter hypothesis would predict the same thing, and tracing the potential will not help us. These authors have again created two possibilities, making you think they are the only two. The paper rides on these stacked assumptions, and they have already become wobbly by paragraph 4.

We can see this just by looking at the percentage they cite for plasma of around 10%. Well, if the plasma is 10% of the mass, it can't be dominant, can it? They say that in the absence of dark matter, the potential will trace the plasma, but that is just a strawman. It isn't true. In models that lack dark matter, such as mine, the potential would not be expected to trace the plasma. This is because no logical model would give the majority of mass to the plasma. Plasma has to be created by the normal matter, via the charge field, so logically it cannot outweigh it. Plasma has to be ionized, and ionization takes energy. You cannot propose a total transfer of energy from a less energetic field to a more energetic one. Therefore, plasma must be energized by a more massive field—the matter field.

We see this again in the authors' unstated method of figuring plasma mass. The logical way to do it is this: from the luminosity and X-ray temperature you get an electron density; from the electron density you get a density of hydrogen and helium (and the other elements if you like). But then you must multiply by a volume. The authors are assuming the plasma fills the whole volume, but this assumption is false. The plasma is much more likely to be in the form of an envelope or shell, so that the mass estimate would fall dramatically. In addition, the authors are assuming that X-ray luminosity traces the mass. This may be true in the optical but it is not true in X-rays. The luminosity traces the electron density and temperature only. There could be a huge mass of gas in the outskirts beyond what you see in the X-ray image, if the temperature of the gas is too cool to be detected by Chandra. So their X-ray centroids are centroids of X-ray brightness only. Therefore they cannot logically claim that these brightness centroids are mass centroids. In fact, the X-ray luminosity goes as electron density squared, so you only need to drop the density a factor of 10 for the X-ray emission to drop below your instrument detection threshold. The drop in temperature will multiply this effect. But it is even worse than that, since the authors use an X-ray temperature/mass relation derived from nicely behaved single clusters which are in equilibrium (which still assumes the whole volume is filled). The bullet cluster is as far away from equilibrium as you can get for these systems. All these assumptions lie hidden beneath the threshold of the visible paper.
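The two scalings in this paragraph, brightness against density and mass against filled volume, can be sketched numerically. The Python below is only an illustration under stated assumptions: it takes the standard bolometric thermal bremsstrahlung scaling (emissivity proportional to electron density squared times the square root of temperature) with arbitrary normalization, it ignores the instrument's energy band, and it treats the plasma as either a filled sphere or a uniform shell. The function names are hypothetical and nothing here reproduces the authors' actual pipeline.

```python
import math


def relative_emissivity(density_factor, temperature_factor=1.0):
    """Emissivity relative to a reference region.

    Assumes emissivity is proportional to n_e**2 * sqrt(T) (bolometric thermal
    bremsstrahlung), so only the ratios to the reference values matter.
    """
    return density_factor ** 2 * math.sqrt(temperature_factor)


def shell_volume_fraction(inner_radius_fraction):
    """Fraction of a sphere's volume occupied by a shell.

    The shell runs from inner_radius_fraction * R out to R.
    """
    return 1.0 - inner_radius_fraction ** 3


# Gas ten times less dense at the same temperature.
print(relative_emissivity(0.1))

# Gas ten times less dense and at a quarter of the temperature.
print(relative_emissivity(0.1, 0.25))

# A shell occupying only the outer 10% of the radius.
print(shell_volume_fraction(0.9))
```

Under these assumptions a tenfold drop in density cuts the emission by a factor of about a hundred, and a mass estimate that multiplies a density by the full spherical volume overstates a thin shell by the inverse of the shell fraction.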

Correcting these mistakes may either increase or decrease the total mass of the plasma, depending on the losses and gains. No doubt the authors will rush to coopt these comments, using only the ones that could cause a gain; but the point is that the authors don't know how to do the fundamental physics here. Which gives a reader little confidence in their claims to have proven anything.

Another major misdirection is dividing “normal matter” into stellar matter and plasma, especially when you remember that stars are plasma, according to the current model. The dark matter theorists want you to think they are different here, but they are different only in that they have been decoupled due to different densities. In fact, they are both baryonic and both include the charge field, so we don't have three categories, we only have two. We have matter—which includes plasma—and we have charge. But these authors are using a divide-and-conquer method here, assuring you continue to look toward dark matter and away from charge. They want you to think we need new matter to fill a gap, and if you remember that stellar matter and plasma are basically the same thing, you might remember the charge field, which ionizes both.

But these problems are just the beginning. We haven't even made it to part 2 (Methodology and Data). There we are shown a map of the potential and told how it is generated. It is generated by assuming that gravitational lensing has been proved, and then applying the formula for weak gravitational lensing to the raw data in order to stretch the image. Problem here is that gravitational lensing is just a mathematical theory, with a few poor data examples. In a long paper, I have shown that we have much stronger data against gravitational lensing than we have for it. The examples the mainstream always lead with, those of the Twin Quasar and Einstein's Cross, are actually very speculative, not to say illogical, and they don't come near to proving the theory. Since the two strongest candidates are so weak, we don't even need to look at weaker candidates. And the data against lensing is ubiquitous, since the dark sky we see every night is that data. If the theory of lensing were true, every object in the sky would be haloed and rehaloed to infinity. All the lenses would act as scattering, and we wouldn't have a dark spot in the sky.

Therefore, we may say, with no threat of contradiction, that the authors are using an unproved assumption to generate an image. Lensing is a hypothesis with absolutely no strong data to back it up. But even if the lensing theory is true, there is no indication it would create a reduced shear across the interior of massive collections of objects. The original idea was that concentrated masses or collections of mass would create bends around them, and the idea that they would create bends on light passing through them was only tacked on later. For this idea, we have even less evidence. One might say that the data is zero and must remain zero, since to accept the idea, we have to accept that the image we see is not correct.

Just think about it for a moment. How would you prove that one way or the other? What these theorists have done is simply assume that if light is bent by going around large collections of mass, it must also be bent going through them. Therefore it distorts them. Therefore all images are distorted. But in that case, all data can be used as proof for and no data can be used as proof against. Let me ask you this: Could you know if all images were NOT distorted by lensing? No, once you assume all images are distorted, you simply apply your math to them to calculate the amount of distortion. The theory is unfalsifiable, since once again the theory assumes what it is expected to prove.

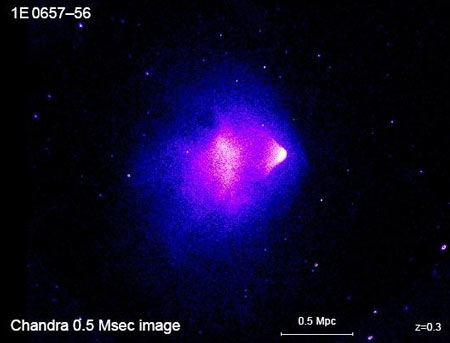

In fact, I would say the data from the bullet cluster is as clear evidence as we could hope for against the lensing hypothesis, especially the internal lensing hypothesis. We can see that the luminosity map C already roughly matches the potential (and matches it precisely in width), so we have no need for lensing calculations. Since we can determine a width without lensing from the luminosity map, the luminosity map must not be affected by lensing. And if the width is not affected by lensing, then our original image is not distorted. And if our original image is not distorted, then lensing has been disproven. But let us study the images even more closely, to show this more clearly.

You can see for yourself that we don't need the lensing hypothesis to generate a potential map. We can generate it straight from the luminosity map. In the first paper, Clowe et al published figure C here (“the luminosity distribution of galaxies with the same B-I colors as the primary cluster's red sequence”), which they ditched in the second paper. They ditched it because it follows the potential map so closely, as you see. All the “stretching” away from the Chandra X-ray photo has already been done, and we don't even need the lensing hypothesis. Just based on luminosity, we can see a big mismatch from the plasma field. Which means the potential is roughly following the luminosity, not the dark matter. As width, the separation of potentials is explained by the luminosity map, and we only need to explain the change in shape. The potential centroids don't follow the shape of the luminous patches, but I explain that below, without dark matter.

Doesn't anyone find it curious that dark matter is supposed to be in places where we find the most luminosity? I would say it is very convenient that the dark matter map matches the luminosity map. Dark matter is dark, is it not? So dark matter concentrations would be unlikely to follow luminosity, correct? In our own galaxy, the theorists put the dark matter in a halo. But here, the dark matter is said to follow the luminosity? Wouldn't it be far more logical to propose that shining matter follows luminosity? And that therefore stellar matter (with its cohorts) must outweigh the plasma?

The mainstream deny this because they cannot get normal matter to provide enough mass, but they are simply missing the charge field. The charge field is the missing cohort. And of course the charge field would be expected to follow the potential of normal matter, since it is emitted by normal matter. Where you have more luminosity, you will naturally have more charge.

The mainstream might claim that dark matter follows normal matter, since the two are linked somehow. But they have failed so far to provide that mechanical or theoretical link. With charge, the link is already there. We don't have to come up with new theory or new particles or new kinds of matter. We already know that charge exists, so all we have to do is give it a non-virtual form.

But this sort of thinking is entirely too sensible for the mainstream. In paragraph 2, part 2, we find this:

Because it is not feasible to measure redshifts for all galaxies in the field, we select likely background galaxies using magnitude and color cuts.

“We select likely background galaxies.” Based on what? Based on whether they fit your model or not? Even supposing that lensing were true, this entire method is slipshod in the extreme. A map of field potential like these authors are creating is a fairly precise thing, but the data we have for distant galaxies is not precise at all. Both the distances and redshifts are highly speculative, which means our knowledge of the light being bent is also highly speculative. Doubtless the likely background galaxies are chosen either because they are easier to read or because they fit the desired outcome. But neither method of selection is scientific, since it precludes obtaining an objective average. You will say that the choice is made on magnitude and color cuts, since that is what we are told, but that is only visible magnitude. We can't know which of these blurs is most or least obstructed by forward matter, so we can't know real magnitudes. This is especially true of background galaxies, which, if they were not obstructed, would not be affected by lensing. They have to be obstructed to be lensed, and then they are chosen for this lensing math because they are unobstructed. Illogical!

In part three, this is partially admitted:

The assumed mass-to-light ratio is highly uncertain (can vary between 0.5 and 3) and depends on the history of recent star formation of the galaxies in the apertures; however even in the case of an extreme deviation, the X-ray plasma is still the dominant baryonic component in all of the apertures. The quoted errors are only the errors on measuring the luminosity and do not include the uncertainty in the assumed mass-to-light ratio. Because we did not apply a color selection to the galaxies, these measurements are an upper limit on the stellar mass as they include contributions from galaxies not affiliated with the cluster.

The authors admit that the quoted errors do not include the high uncertainty in mass-to-light ratios, and they seem to think that admitting it in the body of the text is enough to make you look away. “Oh, they seem honest, so why double-check them?” But even here, they do not admit all the uncertainty. They don't even admit a fraction of it. To accept their figures, you must assume that they know how to measure such things as distance and redshift and energy and temperature almost perfectly. This despite the fact that astronomers were forced to admit just a few years ago that they were off by at least 15% in all simple distance measurements. As I showed in my paper on stellar twinkling, such errors are catastrophic when you come to objects like the bullet cluster. An error in distance of 15% can snowball in equations, to the point that you end up with something like a 180,000% error in final numbers. This is because all your other parameters and measurements and assumptions are also affected, so the error enters your theory in as many as 20 different ways. There I was estimating an error in the age of the universe, and this bullet cluster problem is slightly less complex than that, but small errors can still overwhelm you very fast. Even if we assume that the error in distance has been made better instead of worse in the last couple of years, we still cannot assume it has been made zero. If we were that wrong up to 2008, what makes you think we are completely right in 2010?

That was regarding distance, but the same is true of other parameters. Let us take temperature. Recently top astronomers [Tucker et al, 1998] have claimed a temperature of 17keV for 1E 0657-56. Although this temperature was later shown to be way too high [Yaqoob 1999‡], and the original method of temperature calculation was proved to be badly garbled, the higher figure has continued to be quoted. It is quoted by one of the authors of this bullet-cluster paper, Markevitch. Basically, authors quote any number they like, and get to pick and choose numbers that fit their theories. If a paper says something they don't like, they ignore it or mis-cite it.

As with temperature, so with mass-to-light ratios. The authors tell us that the mass-to-light ratio is highly uncertain, and can vary between .5 and 3, but those numbers are just estimates. If the ratio is uncertain, then the maximum variance is also uncertain, and these numbers of .5 and 3 are not firm at all. Since we are still arguing about what galaxies are made of, and have no firm data on non-baryonic matter (despite this claim of “direct detection”), it is absurd to claim that we know about the “history of recent star formation.” The stack of assumptions is beginning to reach to the Moon, and therefore the claim that “X-ray plasma is still the dominant baryonic component in all the apertures” must be taken with a grain of salt. This quoted paragraph all but admits that the numbers are squishy, and since the entire paper depends on the plasma being dominant, the paper must be hanging by the slenderest of threads.
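To see how much room the quoted range leaves, the stellar mass can be written as the mass-to-light ratio times the measured luminosity. The sketch below is a hedged illustration only: the function name is hypothetical, the luminosity is an arbitrary placeholder in solar units rather than a value from the paper, and the limits of 0.5 and 3 are simply the ones quoted by the authors.

```python
def stellar_mass_range(luminosity, ml_low=0.5, ml_high=3.0):
    """Return (low, high) stellar mass for a luminosity and a mass-to-light range.

    Mass and luminosity are in solar units; the default limits are the
    0.5 to 3 range quoted in the text.
    """
    return ml_low * luminosity, ml_high * luminosity


# Placeholder luminosity, chosen only to show the spread.
low, high = stellar_mass_range(1e11)
print(low, high, high / low)
```

Whatever luminosity is fed in, the two limits differ by a factor of six, and that spread sits on top of the luminosity errors the authors do quote.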

All these manipulations the authors are making are both speculative and squishy. Why not just use the luminosity map? Why ditch it and try to manufacture a dark matter map from lensing? The luminosity map is direct data that doesn't have to be massaged. It requires many fewer assumptions and manipulations to work from the luminosity map. Why hide it in the second paper?

We see this again in the implied claim that we have enough resolution to solve this problem as the authors solve it. But since we have a sigma of only 3.4 here (first paper), we have a signal that is only 3 times the standard deviation of the noise. When I asked an X-ray astronomer for the raw data on this problem, he said this: “Unfortunately the raw X-ray data for clusters is not meaningful in the sense that the counts per pixel are zero or small, so nobody publishes the raw data.” This means that the data is amenable to almost infinite amounts of pushing. The authors push again when they claim to have increased sigma to 8 in the second paper. So much improvement in so little time! This improvement is not explained, beyond our being informed that the authors used three more optical sets. But even if sigma really went to 8, that is a measure of signal to noise, not of resolution. A blurry photo with almost no resolution will remain blurry no matter how long you expose it. These astrophysicists always talk sigma but never admit that the resolution is near zero. With only a few photons, a stronger signal is nearly insignificant. You could expose the image for a year and it would still have no resolution. To improve clarity, these images are smoothed with a 2 arcsec Gaussian, but once you do that you are no longer working with real data. It is like taking a photo with one dot per pixel into Photoshop and trying to improve it by hitting sharpen. No matter what method you use to sharpen, the outcome will be a falsified blur.
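The size of the jump from 3.4 to 8 sigma can be put in rough terms. The sketch below assumes, purely for illustration, that significance grows as the square root of the number of independent samples (photons or background galaxies), which is the usual counting-statistics rule of thumb. That rule is an assumption here, not something stated in the Clowe papers, and the function name is hypothetical.

```python
def data_factor_for_significance(sigma_old, sigma_new):
    """Factor by which the independent sample count must grow.

    Assumes significance scales as the square root of the number of
    independent samples, so the factor is (sigma_new / sigma_old) ** 2.
    """
    return (sigma_new / sigma_old) ** 2


# The two significance figures quoted for the first and second papers.
print(data_factor_for_significance(3.4, 8.0))
```

Under that assumption the second paper would need several times the effective data of the first, and even then the number describes signal against noise, not the fineness of detail in the map.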

None of this would matter so much if we were working straight from the luminosity map, since in that case we wouldn't have nearly as many depth-of-field assumptions. But when we start calculating lensing, we are looking at even more distant fuzzballs with even less resolution. Calculating bends in that situation is akin to witchcraft, and we can only assume that the authors must have followed the luminosity map as a guide. This may be another reason they jettisoned it in the second paper.

In the same breath that they are telling us of new optical sets, they claim that changing the cosmology will not affect the relative masses of the galaxies, but that is also false. It is only true if all masses are at the same distance, but is not true in 3D. A different cosmology will or may change depth of field in various ways, which will change a hatful of assumptions, especially in the lensing part of the theory. They just assume that the different cosmology is not very different.

In part 4, Discussion, the authors admit that another big problem with their method is that the lensing map is two-dimensional, and this “raises the possibility that structures seen in the map are caused by physically unrelated masses along the line-of-sight.” To answer this, the authors give us only a probability from blank surveys, indicating that this is highly unlikely. But the bullet cluster itself is highly unlikely, if we look at blank surveys, so this means nothing. The authors also dismiss the filament proposal with more dishonest talk of probability. This is improper use of probability math. None of the various scenarios are likely, and we don't choose between them based on probabilities. We did not roll the dice and decide to look in the direction of the bullet cluster. We chose it based on its specialness, so the fact that it is special cannot be dismissed as a low probability.

The entire Discussion section is wildly dishonest, as it dismisses other possible interpretations with soundbite analysis and airy citations. For instance, the authors cite Markevitch et al, 2002,† as proof of X-ray plasma temperatures, despite the fact that Markevitch purposely mis-cites papers there. In that paper, Markevitch cites Yaqoob 1999 in support of his claim that temperatures reach 17keV, but if you take that link, you find Yaqoob showing temperatures of around 12keV. Since Markevitch is an author of both bullet cluster papers, we must assume that the authors don't know how to calculate temperatures or make correct citations.

All of this is worth mentioning as an example of the scruples of these authors. The form of their argument should be a big clue as to its content, which is why I have taken the time to analyze both the form and the content. Both show clearly that we are being misdirected. The photographs we have aren't that difficult to read, and are amazingly easy to explain using normal matter, which may be why these authors have to go to such agonizing extremes to keep you from seeing that. The luminosity spread, combined with the Chandra image, are all we need. We already see the decoupling, and only have to explain why the potential would follow the luminosity instead of the plasma. It is simply because the old assumptions were correct: the majority of the mass of the galaxy is stellar mass and its cohorts. The plasma is a secondary player in the unified field, as in the solar system. The plasma is created by normal mass and its charge, so it cannot outweigh it.

Yes, the charge field is the answer to all these manufactured conundrums. The charge field is the cohort of the stellar mass that explains everything. As I have shown in many papers, charge has mass. By the current equations, the statcoulomb is equivalent to mass and the Coulomb is equivalent to mass/second. This means that photons must have mass. They are not point particles and they are not massless. They have no rest mass only because they are never at rest. But they do have energy, which is moving mass, which must be included in totals.

Those who follow my own papers will say that I have presented simple equations that show that the charge field outweighs the normal matter field, so how can I say that the X-ray plasma may not outweigh normal matter in galaxies? Isn't that a contradiction? No. First of all, my charge field, which does generate or energize the plasma, is not equivalent to it. That is the first thing you must understand. A plasma is a plasma of ions. It is these ions the mainstream are weighing when they weigh a plasma, so they are still failing to weigh my charge field and the photons that compose it. The charge field is photons; the plasma is ions. Second, my charge field adds to the weight of the galaxy, but the gravitational potential won't necessarily follow it exactly. This is because the charge field is uncontained, while the matter field is semi-contained. Because of the size of the constituent particles, matter tends to orbit or condense, and this can be called semi-containment. Photons “orbit” only to a tiny degree, with enough bend only to keep them mainly in the galaxy. But photons do not condense or maintain true orbits, which is precisely why they do not constitute matter. They are too small to orbit or condense. This is why we see distant galaxies: some of the photons escape. Because they create such weak “curves”, they add very little to existing potentials. Photons do not mainly work gravitationally, they work electromagnetically. They are the E/M part of the unified field, not the gravitational part. So, although they do have mass, they do not act like other matter.

This is why the luminosity map follows the potential map roughly but not precisely. We don't need lensing to generate a potential map, we need to correct the luminosity map with a charge map. Since charge acts differently than either matter or plasma, we need a third map. If we could map charge, we would find it following baryonic matter, but acting independently in some ways. Because it moves in larger curves, it runs both ahead and behind normal matter, in larger vortexes. And because it is emitted by the spin of matter, it tends to move at right angles to it, in the largest structures. This is precisely what we are seeing in the larger cluster on the left side of figure C. We see the luminosity, and therefore the stellar matter, in a barbell shape running roughly to 1 and 7 o'clock. [Notice that the highest centroid of potential is at the center of the barbell, indicating a match of center-of-luminosity and center-of-gravity.] But the total mass of that cluster runs more east to west, with a strong arm reaching out to 10 o'clock, perpendicular to the barbell. We may assume that the arm that would be reaching out to 4 o'clock is suppressed by the influence of the other cluster. We are seeing the charge field here, working at right angles to the matter field. The same thing goes for the right cluster, where the matter field is oblong mainly side to side, while the total mass is oblong up and down.

This is another reason the dark matter hypothesis is so absurd: they are seeking weakly interacting particles when they already have them. Photons are weakly interacting gravity particles, since their interaction is E/M not gravitational. They are so small and fast they dodge most of the gravity “field”. Gravity curves them very, very little. Since curvature is a measure of potential, photons have very little potential. The mainstream is looking for massive weakly interacting particles (WIMPs), when what they should be looking for is huge numbers of very small weakly interacting particles. The added mass can be gotten either way, of course. And we already have huge numbers of weakly interacting particles: the charge photons.

You will say, “If that is true, and photons add little to the potential, then how can you propose them as mass to fill the potential gap? The original problem of galactic rotation was that we don't have enough mass to cause the velocities we see. If photons don't add potential, how can they affect velocities?” They affect velocities via the E/M field, not the gravity field. But since the E/M field is already part of the unified field, and always has been, they will affect the velocity that way. The velocity equations we have always had are UFT equations, as I have shown. Newton's equations were always UFT equations, and they always included E/M. The great thing about the charge field is that it allows us to explain planetary torques and perturbations mechanically. The old gravity field, either Newton's or Einstein's, couldn't explain forces at the tangent, but charge can. This applies to all bodies in orbit, not just planets. This is how photons affect orbital velocities.

You will say, “If that is true, and the equations we have always used are unified field equations, then they should have already included photons. If they already included them, you cannot add them in now!” Yes, I can, since the historical equations were incomplete. Not wrong, just incomplete. My unified field equations—for force and velocity and so on—include a second term, which expressly includes the material presence of the charge field. Newton's equations included the forces from the charge field, but did not include the material presence of the charge field. To say it another way, Newton included the density of the charge field as its ability to move the matter field, but he did not include the density of the charge field as its ability to take up space and cause drag. Since this term goes to zero in most cases, it can be ignored. Only when we look at very large spaces or very large forces does the second term become significant. This is what is happening in the galactic rotation problem and other similar large-scale problems. This is the unified field velocity equation:

v = √[(GM₀/r) – (Gmᵣ/r)]

Where the first mass is the mass inside r and the second mass is the mass at r. Since the second term can also stand for the drag caused by the photon field, this equation also solves the galactic problems directly.
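The velocity equation above can be evaluated numerically. The following is only a minimal sketch: the function name, the argument names, and the sample inputs (roughly one solar mass at 1 AU, plus an arbitrary second mass) are assumptions chosen for illustration and do not come from this paper.

```python
import math

G = 6.674e-11  # gravitational constant in m^3 kg^-1 s^-2


def unified_velocity(mass_inside_r, mass_at_r, r):
    """Evaluate v = sqrt(G*M0/r - G*m_r/r).

    mass_inside_r is the mass inside r (first term),
    mass_at_r is the mass at r (second term), r is the radius in meters.
    """
    radicand = G * (mass_inside_r - mass_at_r) / r
    if radicand < 0:
        # The second term exceeds the first, so there is no real velocity.
        raise ValueError("second term exceeds the first term")
    return math.sqrt(radicand)


# Illustrative inputs only (assumed values, not data from the paper).
v_first_term_only = unified_velocity(1.989e30, 0.0, 1.496e11)
v_with_second_term = unified_velocity(1.989e30, 1.0e29, 1.496e11)
print(v_first_term_only, v_with_second_term)
```

With the second mass set to zero the function reduces to the familiar √(GM/r), which is the sense in which the second term "goes to zero in most cases". Any nonzero second mass lowers the computed velocity.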

Finally, you will say, “If charge photons replace dark matter, shouldn't we see them? Why is charge dark?” Charge is mostly dark because it is mostly outside the visible spectrum. It is the same reason we don't see most photons in the E/M spectrum: they are above or below the visible. The better question is, “Why don't we detect it?” But we do, of course. Every detection we have of everything is a detection of photons, since only the photons can travel to us. So the luminosity map itself is a detection of the charge field. We just haven't yet read the E/M spectrum data in the right way. Up to now, we have only used brightness to estimate the size of the stellar field. We have not seen that we can use brightness to estimate the local photon field as well. We have gotten used to ignoring the photon field here, so we ignore it in the rest of the universe, too. We don't realize that the brightness we see is only a tiny sample of the photons that are there, so we never even try to extrapolate up and find a figure for the photon field. Because we think the photon is massless, it never even occurs to us. But I have already shown a simple way to do it, and there are others. In the paper I published before this one, I showed an extremely short method using only e, and found a charge mass inside the Bohr radius of 19 baryons per baryon. Meaning, the charge field is 19 times the matter field as a function of mass, that near to matter. This is important, since it matches the current estimate that 95% of the field is non-baryonic. This means the mainstream data is correct, and only the mainstream interpretations of that data are wrong. “Non-baryonic” turns out to be equivalent to “photonic”.
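The step from "19 to 1" to "95%" is plain arithmetic, and it can be checked in a few lines. This is a sketch only; the function name is hypothetical, and the only input is the 19:1 ratio quoted above.

```python
def charge_fraction(charge_to_matter_ratio):
    """Fraction of the total field that is charge, given a charge:matter mass ratio."""
    return charge_to_matter_ratio / (charge_to_matter_ratio + 1)


# 19 parts charge to 1 part matter gives 19/20 of the total.
print(charge_fraction(19))
```

Nineteen parts out of twenty is 0.95, which is the 95% figure cited for the non-baryonic share.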

Once we recognize that the charge field is the answer to all these questions, we see that the dark matter hypothesis rests on a contradiction. To solve the problems it claims to solve, this dark matter must be non-baryonic, which means it isn't like normal matter. How is it said to be different? It is mass that is weakly interacting, which means it must be mass without density. But that is to ignore the definition of both mass and density. Mass is defined as density times volume, so you cannot propose mass that is weakly interacting in this way. Do these people mean to jettison M=DV from the textbooks? They want you to think they are remaining true to Newton (unlike their MOND enemies), but they throw out a fundamental equation from chapter one of basic physics. They claim they are theorizing “independent of assumptions regarding the nature of the gravitational force law”, and then propose mass without density. They want you to think they are proposing a new kind of matter, but they are really proposing a new kind of mass, one that is redefined as not a function of density. Just as they want the void to be nothing one instant and something the next (symmetry breaking, Dirac's field, Higgs field), and just as they want a particle to have energy one instant and none the next (virtual particles), they want mass that has extension and no extension, energy and no energy. They want non-baryons that have mass when we are creating potentials in distant galaxies, but that have no mass when they are traveling through the Earth. If these non-baryons have potential, then they MUST interact with other particles that also have potential. Otherwise the words have no meaning. The same particle cannot act as a gravitational entity in a distant galaxy and as a non-gravitational entity when flying through the Earth. These theories are flagrantly illogical.

When Bishop Berkeley was criticizing Newton's calculus proof, he called Newton's fluxions “the ghosts of departed quantities,” since they were sometimes zeroes and sometimes infinitesimals. These non-baryons of the dark matter hypothesis are the same sort of souls of departed protons, circling in haloes about galaxies like a far-flung hell of moaning spirits. Where else should these ghosts of dead particles go but into the outer regions, far from the sight and influence of good people? We may assume shattered souls of particles and anti-particles are transported here after collision, perhaps by a battery of non-baryonic angels tooting on non-baryonic trumpets. As with the hosts of Heaven and Hades, these ghosts will be weighed after the judgment, but not on the scales of the Earth.

As you now see, the photon solves all these problems, as long as we give it mass and radius. The photon, though it has mass, is not massive, so it is naturally low density. Even with a mass of 19 baryons inside the Bohr radius, it is still very low density. What density, you may ask? We can now calculate it. Since volume depends on radius, the volume inside the Bohr radius is 237,000x the volume of the proton. But we have 19x more mass, so the density of the charge field is 237,000/19 = 12,474x less than the matter field. And there you have it: high mass, low density, just like the WIMP people are looking for.
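The density figure follows from dividing the two ratios just given. The sketch below reproduces that division. It assumes the 237,000 figure is read as a ratio of volumes, which is what the density arithmetic requires, and the function name is hypothetical.

```python
def density_ratio(volume_ratio, mass_ratio):
    """How many times less dense the charge field is than the matter field.

    Density is mass over volume, so spreading mass_ratio times the mass
    over volume_ratio times the volume dilutes density by volume_ratio / mass_ratio.
    """
    return volume_ratio / mass_ratio


# Figures taken from the text: 237,000x the volume, 19x the mass.
print(round(density_ratio(237_000, 19)))
```

Rounded to the nearest whole number, the quotient is the 12,474 stated in the text.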

We may conclude by noticing that these authors and all authors of mainstream papers never do what I have done here. They never bother to say precisely what their theory is. They never get down to brass tacks and try to uncover all the mechanics involved. Their so-called discussions are more deflections or misdirections than discussions, since they mention a few of their colleagues' or reviewers' caveats but never bother to mention their opponents' hardest questions. They never lay it all out on the table and let you decide for yourself. They hide and massage data, and often manufacture it. They are not above dressing not-A up as A, and trying to walk it by you. You should learn to catch them at this, as I have. You should learn to see through the veils. Neither you, nor physics as a whole, will make any progress until that happens.

Postscript: Since around 2008, the mainstream people involved in the dark matter hypothesis have dropped the bullet cluster as a lead story. It may be because they realize it is a sitting duck. Joe Silk, the Savilian professor of astronomy at Oxford, admitted at a recent colloquium (Oct. 2010) that the current bullet cluster theory was "a mess". Rather than let that deter him, however, he continued to pursue his thesis, which was that supersymmetric particles may be a good candidate for dark matter. In other words, he is making up particles to fill holes that he and his colleagues made up. Problem is, supersymmetry is a theory with no data, created to explain another theory with no data, which itself was created to explain a non-mechanical theory made up from whole cloth, again with no data and no possible data. Silk even admitted that supersymmetric dark matter has 123 adjustable parameters. He stated this with some pride, as current physicists do when they are trying to wow you with the math. A math with 123 adjustable parameters is not an albatross, it is a prize goose. That is, it is a prize goose as long as it is your goose. When the opposition has a math with adjustable parameters, THAT is an albatross. Silk proved this double standard when he dismissed non-dark matter gravity theories (such as MOND) as having "too many adjustable parameters." So much for consistency as a fundament of science. He also said MOND makes no predictions. But of course dark matter theorists have never made a prediction. Dark matter is not a predictive theory, it is a stop-gap theory, as are all current theories.

Silk also admitted that we know almost nothing about dark matter, except that it is affected by gravity. But in answer to why, if galaxies are predominantly composed of dark matter, the central black hole is not dark matter, Silk replied that dark matter can't form black holes because it can't lose enough energy. Apparently dark matter is gravitational, except when we want to exempt it from acting gravitationally.

*http://iopscience.iop.org/0004-637X/604/2/596/pdf/59234.web.pdf

**http://arxiv.org/PS_cache/astro-ph/pdf/0608/0608407v1.pdf

†http://iopscience.iop.org/1538-4357/567/1/L27/fulltext

‡http://iopscience.iop.org/1538-4357/511/2/L75/pdf/985488.web.pdf